Sunday, 24 August, 2025

Gmail Phishing Attacks Now Leverage AI Prompt Injection to Outwit Defenses

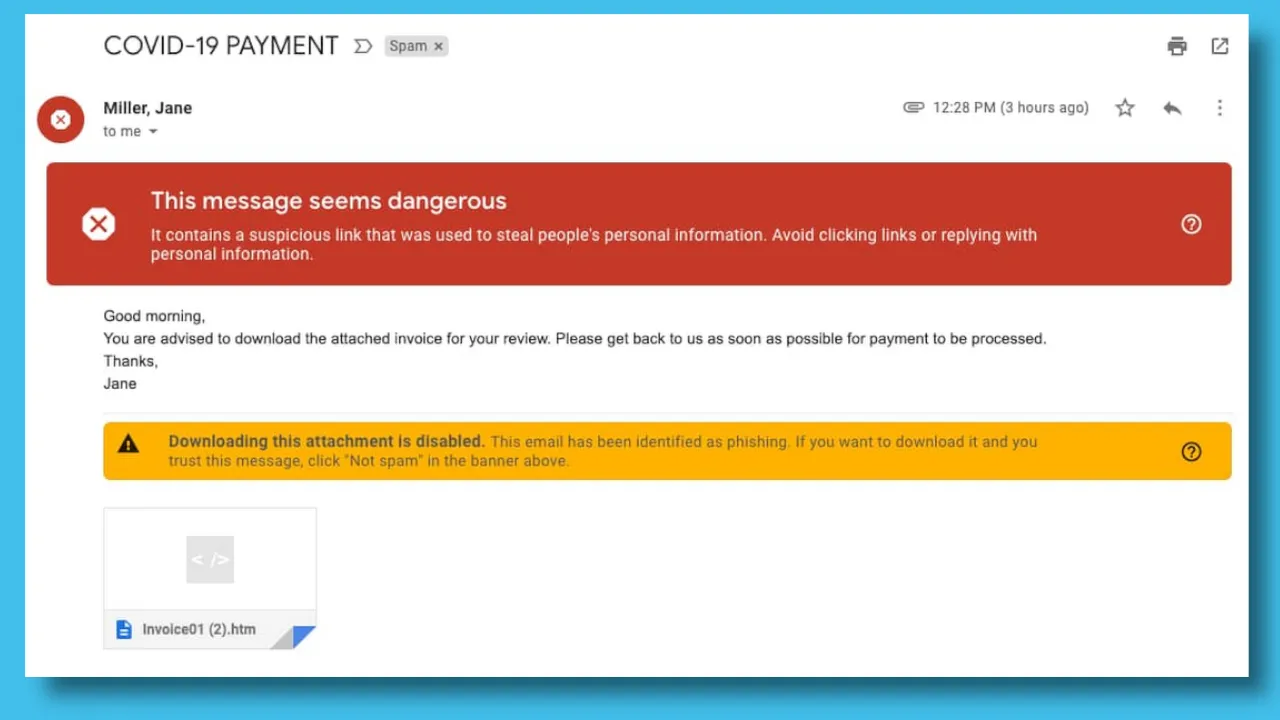

Cybercriminals are using sophisticated phishing emails embedded with hidden AI prompts—not visible to users—that trick email summarizing assistants like Google’s Gemini into generating fake security alerts. These “indirect prompt injection” attacks insert malicious instructions via invisible HTML/CSS (e.g., white-on-white text), which bypass detection and cause automated summaries to mislead recipients—often prompting them to call spoofed support numbers or reveal sensitive data.

Read full story at Cybersecurity News