Thursday, 5 March, 2026

Prompt Injection Attacks Now Bypass AI Agents by Manipulating User Inputs

By Isha

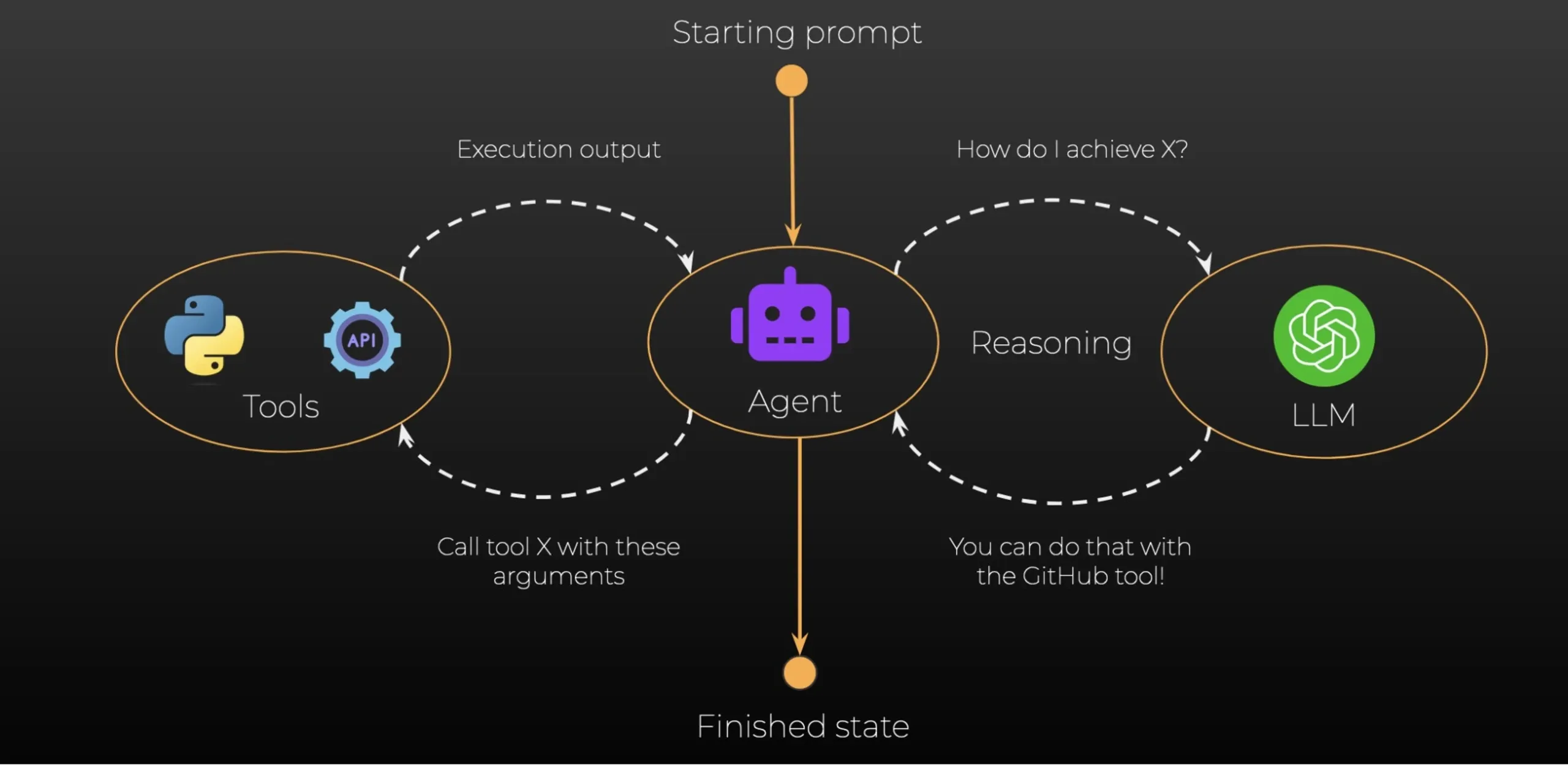

Prompt injection attacks represent a growing and critical vulnerability in AI systems, allowing malicious actors to manipulate AI agents—designed for autonomous tasks—by crafting deceptive user inputs disguised as legitimate commands. These attacks bypass system guardrails by exploiting the inability of large language models to distinguish between system instructions and user-provided input.

Read full story at Cybersecurity News